Collaborators

Project Brief

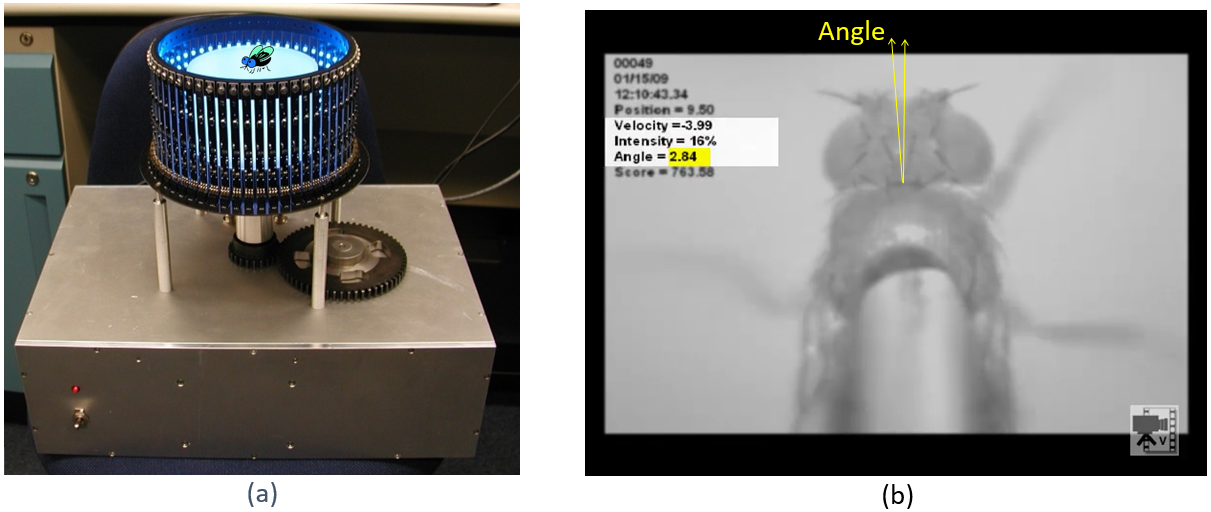

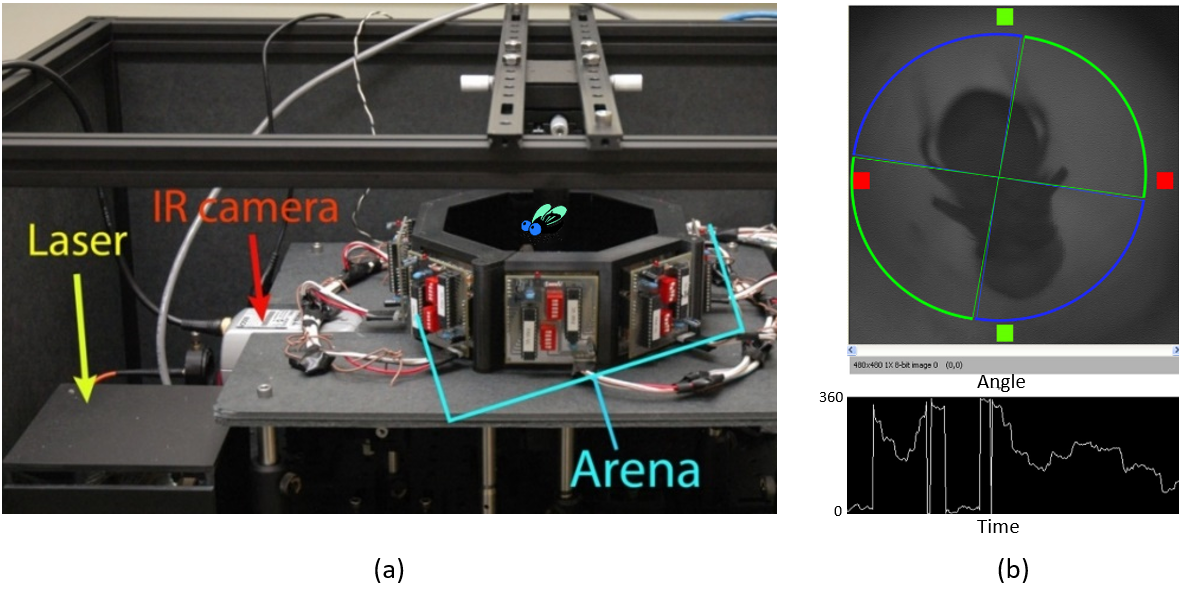

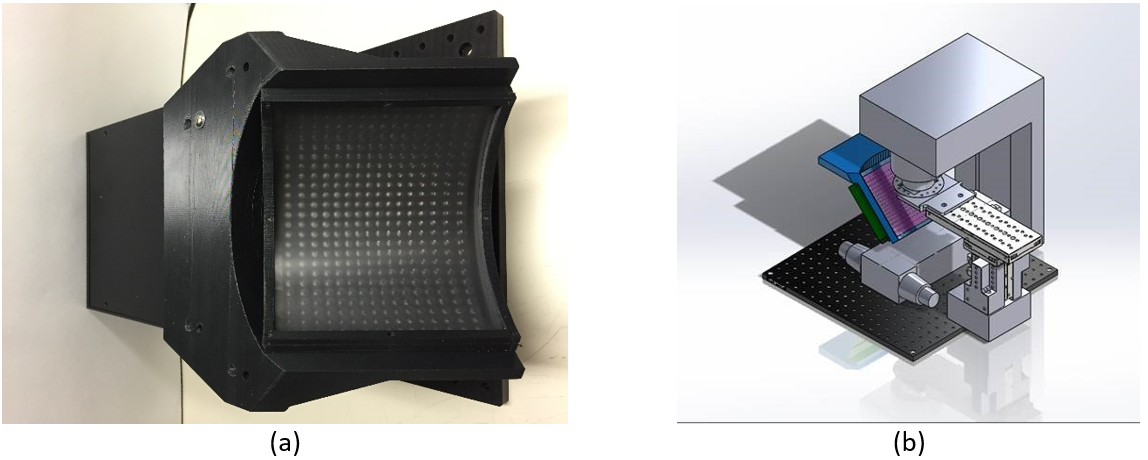

SPIS has been collaborating with NICHD's Section on Neuronal Connectivity and NIBIB to develop one-of-a-kind virtual-reality (VR) behavioral systems for assessing the functions of Drosophila visual circuits. These VR systems comprise custom electronics, opto-electronics, imaging systems, mechanical hardware, and software. Because vertebrates share similar visual functions and neural circuit architectures with flies, the research will provide a better understanding of how these systems receive, process, and interpret visual stimuli associated with motion and color. SPIS developed custom instruments that run a battery of behavioral tests, combined with manipulations of neuronal connections, to help identify the specific neural circuit elements that detect motion and distinguish colors. Another instrument, currently under development, will generate ultraviolet visual stimuli while the activity of visual neurons is recorded with two-photon calcium imaging. By synchronizing the stimuli with the calcium imaging, specific neural pathways will be detected and mapped.

Awards:

- 2016 NIH Director’s Award: Using virtual-reality systems to dissect neural circuits of color vision

- 2012 NICHD Scientific Director’s Intramural Award: Integration of Chromatic Information in the Higher Visual Center of Drosophila